By Stoyan Stoyanov

No doubt about it … containers are great! Whereas it could take days (or even weeks!) to provision a physical server, containers allow developers to spin up an application server in a matter of minutes. Containers let development teams put a “bubble” around an application (wrapping the application and the libraries it depends on into a single package), so that it can be turned off, turned on, moved, or replicated as needed – without impacting other servers or applications. Containers allow teams to quickly start up a new instance of the application for testing or to offload visitors from a busy application.

But when the burden of maintaining containers gets to be too much – numerous host updates, delays in provisioning new servers, and outages – developers usually turn to container management solutions.

In IT, the speed of change is so fast that we are afraid to blink. Nowadays, newcomers to the container world often have no option but to start by selecting a container management solution, even before they’ve had a chance to fully understand the ins and outs of containers. At UCSD, we took the time to do both.

There is a huge plus to being a newcomer. It’s like being a child again and everything is possible. You look at all this exciting technology and you are eager to learn more. Finally! There is something that does make sense; something that can lead to real optimization of system administration work.

We started looking into containers a year ago at UC San Diego. We spun up a Docker Swarm development environment in Amazon Web Services (AWS) and deployed some test workloads. Then, for container management solutions, we briefly looked into Docker Enterprise Edition. But ultimately, the appeal of the vibrant, open-source community around Kubernetes made it our first choice as a container management solution.

Let’s face it. Kubernetes is simple but complex. Yes, it is simple to deploy, scale up and scale down, load balance, offload SSL, and implement mutual TLS. But for all this to become reality, a lot of effort needs to be spent on implementing a resilient, production-ready infrastructure. Since we don’t have unlimited labor, we decided to go with EKS, the managed Kubernetes service from AWS.

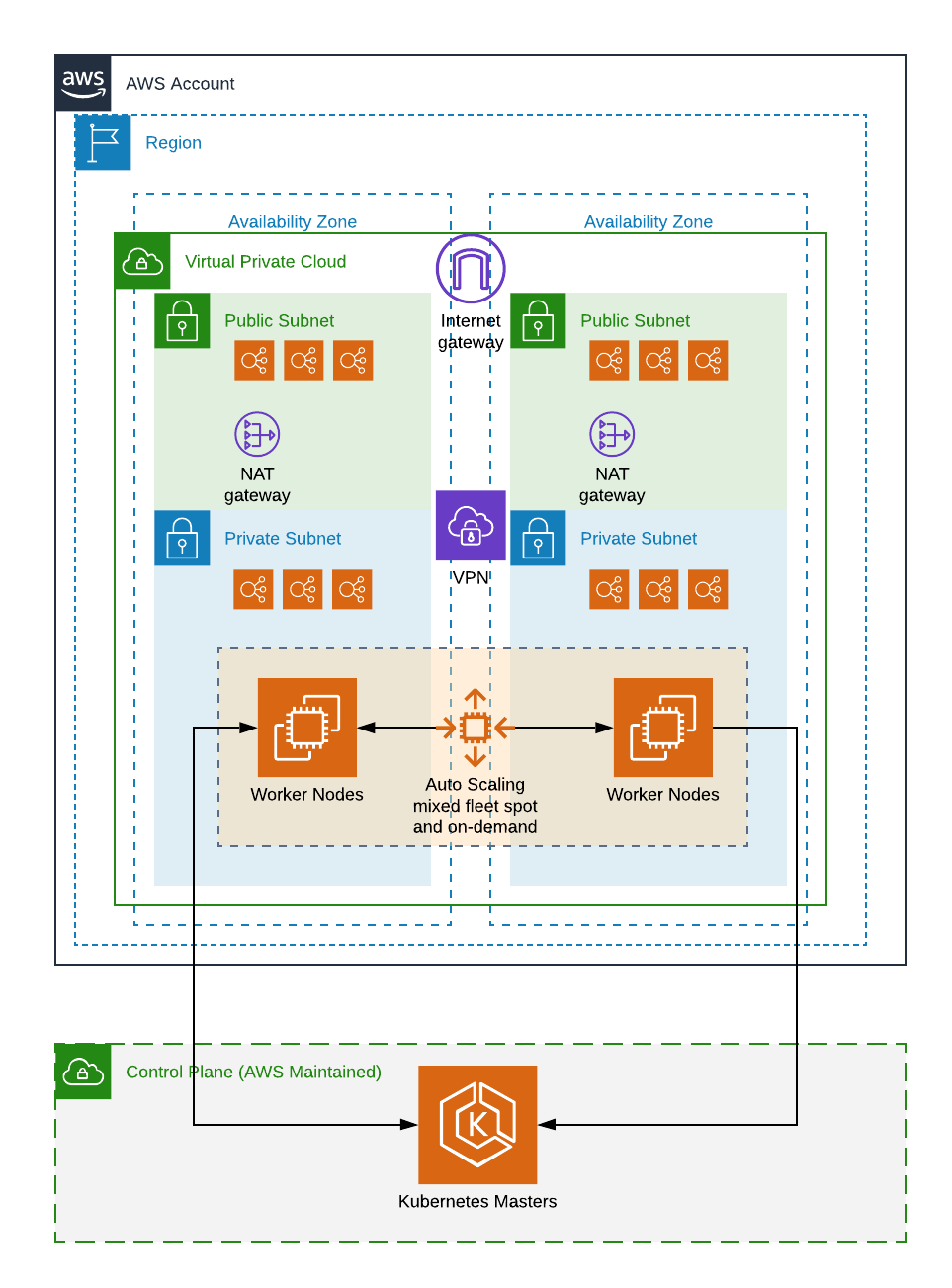

EKS allowed us to concentrate on running the data plane (the worker nodes or servers that the workloads run on). We still have to upgrade the Kubernetes version on EKS every now and then, but that is straightforward. EKS comes with identity and access management (IAM) integration, which might be relevant to some locations, but we chose to rely exclusively on Kubernetes’ role-based access control (RBAC) instead. This ties in very well with our continuous integration/continuous deployment infrastructure, and allows us to remain cloud-agnostic, so we can potentially switch to another cloud provider or an on-premises Kubernetes cluster. (See the diagram at the end of this article.)
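To make the RBAC approach a little more concrete, here is a minimal sketch of the kind of namespaced Role and RoleBinding a deployment pipeline might use. It is an illustration only, not our actual configuration: the namespace `demo-app`, the service account `ci-deployer`, and the chosen resources and verbs are all hypothetical. The script only builds the manifests and prints them as JSON, a format `kubectl apply -f` also accepts.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"


def make_role(namespace, name, resources, verbs):
    """Build a namespaced RBAC Role manifest as a plain dict."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        # "" is the core API group (pods, services); "apps" holds deployments.
        "rules": [{"apiGroups": ["", "apps"], "resources": resources, "verbs": verbs}],
    }


def make_role_binding(namespace, name, role_name, service_account):
    """Bind a Role to a service account in the same namespace."""
    return {
        "apiVersion": f"{RBAC_API}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": name},
        "subjects": [
            {"kind": "ServiceAccount", "name": service_account, "namespace": namespace}
        ],
        "roleRef": {"apiGroup": RBAC_API, "kind": "Role", "name": role_name},
    }


# Hypothetical names, chosen only for this example.
role = make_role(
    "demo-app",
    "deployer",
    resources=["deployments", "services", "pods"],
    verbs=["get", "list", "watch", "create", "update", "patch"],
)
binding = make_role_binding("demo-app", "deployer-binding", "deployer", "ci-deployer")

# Wrap both objects in a List so they can be applied as one file.
manifests = {"apiVersion": "v1", "kind": "List", "items": [role, binding]}
print(json.dumps(manifests, indent=2))
```

Because the permissions live in Kubernetes objects rather than in a cloud provider's access policies, the same manifests could in principle be applied to a cluster running anywhere.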

Kubernetes is so flexible that with a little research, we were able to integrate it with our existing infrastructure. Additionally, EKS plays well with our logging system. Preserving the environment the developers are familiar with simplifies the transition to a containerized infrastructure.

The cloud has a big advantage: the organization does not have to pay high upfront costs. That doesn’t mean it’s free, though. Far from it. When building an infrastructure in the cloud, one should be aware of and account for all the costs that the deployed resources will generate. Running the infrastructure for Kubernetes is complicated and can be expensive. We’ll need to think carefully about how we provide a “batteries-included” developer experience without breaking the bank.
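One simple way to account for those costs is to add up the hourly charges for the control plane and the worker nodes, then add the flat monthly extras. The sketch below does only that. Every rate in it is a made-up placeholder, not a real AWS price or a figure from our environment, and the `ClusterCost` class is a hypothetical helper written for this example.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


@dataclass
class ClusterCost:
    """Rough monthly cost model for one Kubernetes cluster."""

    control_plane_hourly: float  # managed control plane charge per hour
    node_hourly: float           # charge per worker node per hour
    node_count: int
    fixed_monthly: float = 0.0   # load balancers, storage, data transfer, ...

    def monthly_total(self) -> float:
        hourly = self.control_plane_hourly + self.node_hourly * self.node_count
        return hourly * HOURS_PER_MONTH + self.fixed_monthly


# Placeholder rates for illustration only; look up current pricing before use.
dev_cluster = ClusterCost(
    control_plane_hourly=0.10,
    node_hourly=0.10,
    node_count=3,
    fixed_monthly=50.0,
)
print(f"Estimated monthly cost: ${dev_cluster.monthly_total():.2f}")
```

Even a rough model like this makes it easier to see how quickly per-cluster and per-node charges add up when each team or environment gets its own cluster.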

We haven’t figured everything out yet. For example, sharing a virtual private cloud between clusters might not work as well as we had initially thought, proper monitoring and alerting are challenging, and we have yet to find the perfect solution for multitenancy, which is currently an open question in the Kubernetes community. But we ask questions and learn … quickly! Don’t blink, remember?

Stoyan Stoyanov is sr. systems engineer, Information Technology Services, UC San Diego.

Cool! Did you consider using the AWS Fargate service for container deployment?

Hi Eric! Yes, we did. Fargate is great when you have a couple of workloads, but in the long run it becomes expensive. Also, using Fargate would’ve locked us in with AWS to a much greater extent than EKS.