Three panel discussions gave conference attendees the opportunity to hear experts in their respective fields discuss artificial intelligence as it applies to research, education and managing the complexity of implementation, as follows: (1) Research Frontiers with moderator Theresa Maldonado, UC Office of the President; (2) Pedagogy and Innovation Frontiers with moderator Richard Lyons, UC Berkeley; and (3) Application Frontiers/Operations with moderator Camille Crittenden, CITRIS and the Banatao Institute.

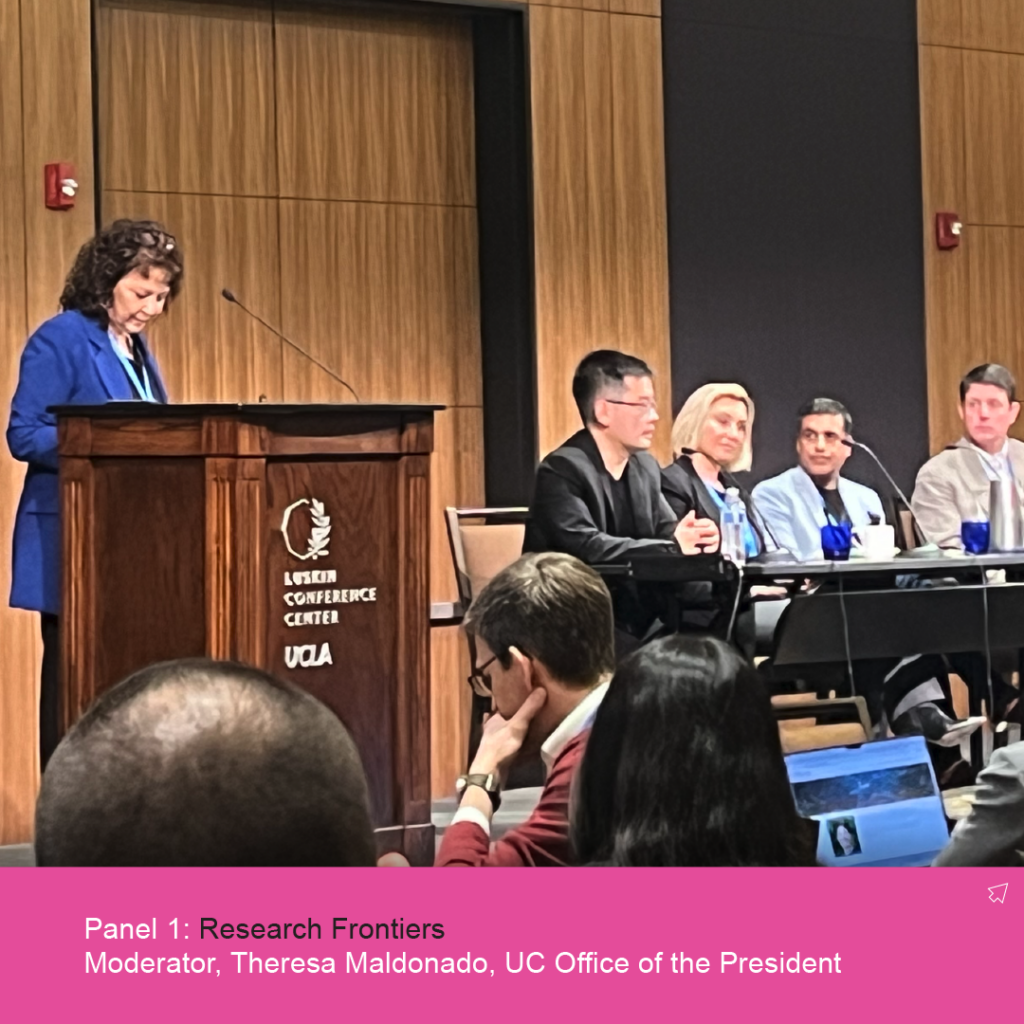

Panel 1: Research Frontiers

Panel Description: UC is home to some of the leading AI researchers in the world, and its students are actively engaged in developing, testing and using new AI applications. How can researchers in a range of disciplines harness the promise of AI and high-performance computing to accelerate discoveries while also attending to data security and questions of research integrity? Panelists discussed their research in cutting-edge areas of genetics, cybersecurity and real-time monitoring and prediction of wildfires.

Moderator: Theresa Maldonado, UC Office of the President: Vice President for Research and Innovation

Panelists: William Wang, UC Santa Barbara: Director, Center for Responsible Machine Learning; Ilkay Altintas, UC San Diego: Chief Data Science Officer, San Diego Supercomputer Center; Ashish Atreja, UC Davis Health: Chief Information and Digital Health Officer; and David Danks, UC San Diego: Professor, Data Science & Philosophy

Key Takeaways of the First Panel:

1. Leverage UC resources: UC encompasses ten campuses, three national labs, and other entities, and panelists emphasized that the infrastructure serving them is crucial but expensive. Providing the right level of services around resources ensures sustainability and scalability through increased usage.

2. Cost considerations: Implementing large language model platforms, such as those from OpenAI, at scale carries significant costs. While such tools may be freely available to individuals, enterprise-level usage incurs substantial expenses, so costs must be factored into any plan to scale infrastructure.

3. Human investment: Beyond physical infrastructure, panelists emphasized the importance of investing in people. This includes providing training, education, and support for individuals integrating AI into their workflows or teaching.

Panel 2: Pedagogy and Innovation Frontiers

Panel Description: Artificial intelligence promises to transform the academic landscape for teachers and learners, both in the classroom and in entrepreneurial activities. How can UC best leverage advances in AI to boost students’ academic success and provide opportunities for them to explore new career paths in innovation? At the same time, AI accelerators, industry partnerships and supportive tech transfer offices can help to amplify the innovative research happening across the system.

Moderator: Richard Lyons, UC Berkeley: Chief Innovation and Entrepreneurship Officer, Chair, UC President’s Council for Entrepreneurship

Panelists: Jill Miller, UC Berkeley: Professor, Art Practice, Founding Director, Platform Artspace; Rosibel Ochoa, UC Riverside: Associate Vice Chancellor, Technology Partnerships; Brian Spears, Lawrence Livermore National Lab: AI Innovation Incubator; Tamara Tate, UC Irvine: Project Scientist, Digital Learning Lab; and Zac Zimmer, UC Santa Cruz: Associate Professor, Literature

Key Takeaways of the Second Panel:

The panel discussion on AI and education, featuring experts from several UC campuses and the Lawrence Livermore National Laboratory, delved into the profound consequences and massive scale of the AI revolution, particularly in the context of pedagogy, innovation, and national security. The panelists emphasized the need for academia to keep pace with industry in terms of ambition and scale while critically examining the ethical implications and unintended consequences of AI.

Panelists shared examples of integrating AI into education, such as Jill Miller’s art class that explored the representation of diverse objects in 3D model marketplaces and Zac Zimmer’s writing class that discussed the optimization of writing for AI audiences. Tamara Tate introduced Papyrus AI, a platform that scaffolds the use of generative AI for writing, while Rosibel Ochoa highlighted the challenges of technology transfer and startup support in the rapidly evolving AI landscape.

Brian Spears brought a national security perspective, discussing the dual-use nature of AI technologies, the need for educating people about boundaries and risks, and the massive investments being made by industry. He called for academic institutions to think at the same scale as industry and engage in initiatives like Frontiers in AI for Science and Security Technology (FASST) to transform large-scale science and prepare students for the AI revolution.

The discussion also touched on the future of writing education, with panelists encouraging collaborative creative processes and emphasizing the importance of developing students’ own voices. Accessibility and inclusion were highlighted as critical considerations, with a call to ensure that AI tools can help people with disabilities express themselves in new ways.

Funding and public-private partnerships for AI research emerged as key challenges, with concerns raised about the allocation of resources and the need for a coalition of national labs and universities to secure access to compute power and data for students and faculty. The threat of publishers curtailing rights to mine text and data in academic corpora was also discussed, with panelists emphasizing the importance of pushing back against restrictions on data access.

Actionable suggestions for the UC system included implementing an AI literacy requirement to provide students with a foundation in history, ethics, and operational tools, as well as providing university-controlled AI tools that protect privacy and allow for the building of new models. The ultimate goal is to educate students to be literate in AI and empower them to shape the future as they enter the world.

Panel 3: Application Frontiers/Operations

Panel Description: As artificial intelligence becomes increasingly important in higher education, not only in research and teaching but in the operations of the enterprise itself, it raises novel and critical questions about privacy, intellectual property, creativity, and individual rights and equity. How will universities evaluate and incorporate the rapid advances in AI and assess what the technology means for teaching, research, and healthcare services? What are the implications for higher ed institutions and our role in promoting original research and creative work, upholding principles of equity, and ensuring data security and privacy?

Moderator: Camille Crittenden, CITRIS and the Banatao Institute

Panelists: Lucy Avetisyan, UCLA: Associate Vice Chancellor & Chief Information Officer (CIO); Janet Napolitano, UC Berkeley: Director, Center for Security in Politics; Brandie Nonnecke, CITRIS Policy Lab: Director; and Jonathan Porat, State of California: Chief Technology Officer

Key Takeaways of the Third Panel:

The Application Frontiers panel at the AI Congress delved into the responsible deployment of AI technologies, considering unintended consequences and the need to enhance productivity without sidelining marginalized populations. Panelists from UCLA, the State of California, and the CITRIS Policy Lab, along with a former Secretary of Homeland Security, shared their experiences and insights on AI governance, risk assessment, and the importance of interdisciplinary collaboration.

Lucy Avetisyan, CIO at UCLA, shared her institution’s journey in exploring AI capabilities, highlighting the challenges faced since OpenAI launched ChatGPT in November 2022. UCLA has taken steps to support its campus community by hosting events with tech giants, raising awareness about data privacy, and planning to launch a number of AI-focused initiatives.

Jonathan Porat, CTO of the State of California, discussed the state’s deliberative approach to implementing generative AI, guided by Governor Newsom’s executive order. The state focuses on guidelines, risk assessment, and outcomes-focused governance, with an emphasis on scalable applications that prioritize workforce support and data privacy.

Janet Napolitano, former UC President and Secretary of Homeland Security, addressed the security implications of AI, warning about the potential for data poisoning and the need for data certification and proactive measures by private actors. Brandie Nonnecke, Director of the CITRIS Policy Lab, cautioned against hyper-focusing on generative AI and neglecting other AI applications. She highlighted the UC Presidential Working Group on AI’s efforts to develop the UC Responsible AI Principles and encouraged leveraging the UC system’s power when working with private sector entities.

During the panel discussion, participants shared promising AI application areas and potential policies or recommendations for the UC system. The panelists also addressed questions from the audience, covering topics such as creating a productive culture around AI, using AI to compare terms and conditions of service, the impact of AI on the job market, validating AI predictions, and engaging the community to identify key AI use cases.

Throughout the panel, speakers highlighted the need to deploy AI responsibly, consider unintended consequences, educate students, and upskill the workforce. The UC system’s leadership in establishing AI principles and councils, along with its potential to shape AI practices through the “UC effect,” was seen as crucial in navigating the AI revolution. The panelists emphasized the importance of interdisciplinary collaboration, continuous monitoring, and human oversight in ensuring that AI technologies are developed and deployed in a manner that aligns with societal values and promotes the public good.

AI Council Update

Alex Bui, Co-Chair of the UC AI Council, provided an overview of the council’s efforts to institutionalize the UC Responsible AI Principles across the system. The council aims to establish a baseline set of principles for AI governance, coordinate with various stakeholders, harmonize definitions across campuses, and provide a central resource for AI-related information through its newly launched website.