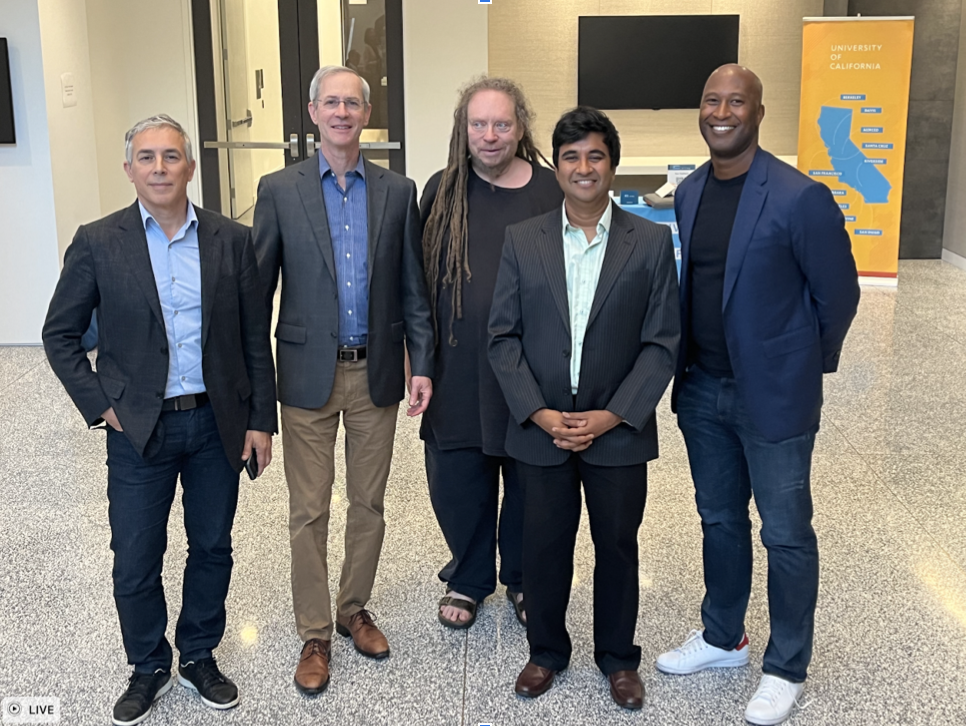

In July 2023, Van Williams, vice president (VP) of Information Technology (IT) and chief information officer (CIO) of the University of California (UC), invited leaders from the UC Office of the President to discuss developments in the field of AI. Mark Nitzberg (UC Berkeley) moderated the discussion, with panelists Americo Carvalho (Amazon), Jaron Lanier (Microsoft), and Pratap Ramamurthy (Google). This 90-minute lunch meeting was a seminal event, one of the earliest of its kind at the UC Office of the President, bringing together leaders from major technology firms and academia to discuss AI. The meeting laid the groundwork for an ongoing leadership dialogue on AI at the University of California, including the recent UC AI Congress held at UCLA earlier this month.

The summary below offers quick takeaways from the UC Tech News team, who attended this short but rich meeting. The team has incorporated its interpretations of the individual responses of the four participants and is eager to share these notes to help us track the conversation on AI as it develops over time.

What are AI and generative AI, and how do their elements come together to affect innovation?

Starting point: What AI is “not”…

Jaron Lanier reminded us that there is no autonomous intent behind AI. He explained that generative AI is not a “thing” with intent of its own, but rather a product of “mass human collaboration.” It is a tool that can help us clarify and understand answers to questions based on patterns that recur across large amounts of existing human work. That is, it culls existing information from a given source (e.g., the internet) and, based on an algorithm, generates a response that often feels as if it has an intent behind it. However much it may feel like a “thing,” it remains the product of mass human collaboration. He emphasized that, contrary to widespread belief, it is not something to be feared. As Lanier wrote in a recent New Yorker article titled “There Is No A.I.,” “The most pragmatic position is to think of A.I. as a tool, not a creature.”

Traditional AI vs generative AI

Americo Carvalho contrasted generative AI with traditional AI, explaining that generative AI is a type of AI that can create new content, such as text, articles, images, and music. What distinguishes it from traditional AI is that it leverages foundation models, such as large language models (LLMs), which can be adapted to respond to a virtually unlimited variety of prompts.

Why 2023 is considered the onset of a new era: evolution of generative AI into text

Pratap Ramamurthy provided a historical perspective on why 2023 was important. Generative AI has been around for several years; it began by enabling the creation of almost real-looking images, such as deepfakes. Generating text was a harder problem than generating images because the model needs to “understand” the essence of words. The term “natural language” is anything but “natural.” Images already exist and can be blended to create something “new,” whereas sentences do not pre-exist in the way that the photos used to create deepfakes do. To produce a sentence or paragraph, a computer program relies on a system that predicts the next word in a sentence, based on patterns learned from the many examples it is fed. This was a challenging task. Making such a system sound intelligent, answer a question, or follow instructions marks a new era, and that is why we are here today. Generative AI for text is now the new frontier, and the big leap that made it possible was the large language model (LLM).
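To make “predicting the next word” a little more concrete, here is a minimal sketch in Python. It assumes a toy bigram model (it simply counts which word follows which) trained on a tiny corpus invented for illustration; the function names are hypothetical. Real LLMs use transformers trained on vast amounts of text, not word-pair counts, so this shows only the basic principle of learning next-word statistics from examples.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for each word, which words follow it in the example sentences."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    # Ties are broken by the order in which followers were first encountered.
    return candidates.most_common(1)[0][0]


def generate(follows, start, max_words=5):
    """Greedily extend a phrase one predicted word at a time."""
    words = [start]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical toy corpus, invented for illustration only.
corpus = [
    "the student wrote the essay",
    "the student read the book",
    "the teacher read the essay",
]
model = train_bigrams(corpus)
print(predict_next(model, "the"))
print(generate(model, "teacher"))
```

Starting from “teacher,” the toy model produces “teacher read the student wrote the”: each step is locally plausible, but the whole is not a sensible sentence. That gap is one way to see why producing fluent, meaningful text was so much harder than simple prediction, and why larger models that weigh far more context mattered.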

The technical breakthroughs behind Large Language Models (LLMs)

The conversation turned to the technical breakthrough that underpins the large language model: the transformer architecture, introduced by Google researchers in 2017, who initially trained relatively small transformers. Later, OpenAI, a Microsoft partner [Editor’s note: Microsoft owns 49% of OpenAI (Techopedia)], ventured into training much larger transformers, such as the GPT models behind ChatGPT. [Editor’s note: GPT stands for Generative Pre-trained Transformer.] ChatGPT, a chatbot developed by OpenAI, launched on November 30, 2022 (Wikipedia). [Editor’s note: Transformers allow words to be “understood” in context by relating each word to many other words around it. Read: “Generative AI Exists Because of the Transformer,” Financial Times, September 11, 2023.]
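The core mechanism that lets a transformer relate each word to the words around it is called attention. Below is a minimal sketch of scaled dot-product self-attention in plain Python, under simplifying assumptions: the two-number word vectors are invented for illustration (real models learn vectors with hundreds or thousands of dimensions), and we skip the learned query, key, and value projections by using the input vectors directly for all three.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs.

    For each word, score its similarity to every word (dot product divided by
    sqrt of the vector size), convert the scores to weights with softmax, and
    return a weighted average of all word vectors as its new, contextual vector.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-d "embeddings" for three words, invented for illustration.
words = ["river", "bank", "money"]
vectors = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]
outputs, weights = self_attention(vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word “attends” to each of the three words. Because the middle vector sits between the other two, “bank” draws equally on “river” and “money”; in a trained model, the surrounding words would shift those weights and so shape which sense of “bank” is represented.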

What is next: the opportunities and challenges ahead

At the time of this July 2023 meeting, ChatGPT had been available for only a few months. “It is early – 6 months – since our socks were knocked off by ChatGPT,” as one panelist put it. Even so, the panelists agreed they had already seen some of the possible applications and challenges of generative AI playing out. Their discussion included the following:

Opportunities: Positive applications of generative AI in higher education

The panelists began with the successes of traditional AI over the past two decades. Americo Carvalho reminded us that traditional AI has been used to measure student success and, in administration, to improve operational efficiency, for example, by targeting marketing for enrollment or processing transcripts automatically. The new areas being opened up by generative AI include:

- Student success through personalized, accessible content and tutoring: Generative AI can enable a personalized experience that helps students understand new concepts more effectively. The panelists envisioned educational content, curricula, instructional materials, and tutoring tailored to each student’s individual needs to make content more accessible. It could even adapt instructional materials based on a student’s past academic performance, offering more support in areas where the student struggles and less in areas of strength. This could dramatically reduce costs and improve scalability.

- Research advancements: Generative AI can aid academic research by generating synthetic data and images, particularly in health care, which can improve model accuracy and facilitate research. For example, researchers can generate artificial (synthetic) images that capture tiny variations of a tumor, giving the models used to diagnose such tumors more varied examples to train on.

Challenges of generative AI in higher education

- Plagiarism and academic integrity: The ease of generating content with generative AI raises concerns about detecting plagiarism and maintaining academic integrity. Educators may struggle to distinguish AI-generated work from student-authored work. The panelists considered a world where students could skip learning skills such as writing. (They reminded us that penmanship was replaced by typing.) They also discussed the challenge of knowing whether a student generated the content independently. (One audience member pointed out that this would remain a challenge unless we watched each student write their work, alluding to the reliance on integrity that has existed for many years, for example, when reviewing students’ applications.)

- Intellectual property: The university creates a significant amount of important original content. We would not want to feed it into a public large language model without guaranteed confidentiality; otherwise, other users could borrow or monetize that data without attribution. While there was little time to unpack this challenge, the panelists added it to the list.

- Supervision and quality assurance: Generative AI is a powerful tool but requires constant supervision to ensure that its responses are trustworthy. Users tend to assume that whatever the model generates is true. People need to be educated to understand that generative AI results are based on data and models, either of which may be incorrect or biased.

- Hallucinations: In some cases, the result is simply inaccurate. When a response is not faithful to the source content or does not make sense for another reason, the model is said to be “hallucinating.” Scaling up models helps reduce this tendency.

Recommendations for effective and safe deployment of generative AI

AI represents a powerful set of tools that can positively change higher education if we use them correctly. The three panelists introduced concepts to help us do so:

- Responsible AI: We must establish a framework for documenting and explaining AI models and their decision-making processes to enhance transparency. We also need to recognize that data drives the decisions these models make, so data inspection and bias mitigation are extremely important. (Americo Carvalho)

- Data dignity in AI: Credit and responsibility should go to the people whose data influences AI models. This approach aims to increase transparency and accountability in AI development by letting us know who contributed which data. (Jaron Lanier)

- Do no harm: Principles such as “do no harm,” reflected in the AI Principles Google established in 2018, still hold importance. Upholding them requires active participation from everyone, including members of the UC community and other leaders, to address AI-related challenges and potential harms. (Pratap Ramamurthy)

Background on the Event

[Editor’s note: As part of an artificial intelligence (AI) conference series, UC Tech News is covering UC AI conferences and major meetings to provide a comprehensive understanding of the evolution of AI within the UC system. The invitation-only event described here was held at the UC Office of the President in July 2023 for 30 university leaders and is the first in the series. We look forward to covering several other key events that have taken place since then, and we welcome you to cover other events and share your impressions in this newsletter as well.]

Moderator

Mark Nitzberg

Head of Strategic Outreach

Berkeley Artificial Intelligence Research

UC Berkeley

Panelists

Jaron Lanier

Prime Scientist

Microsoft

Americo Carvalho

Head of AI/ML, Worldwide Public Sector

Amazon Web Services

Pratap Ramamurthy

AI/ML Customer Engineer

Google

Authors

Camille Crittenden

Executive Director

CITRIS and the Banatao Institute

Laurel Skurko

Marketing & communications

UC Office of the President

Brendon Phuong

Marketing & communications Intern

UC Office of the President