By Josh Baxt

Go to any jazz club and watch the musicians. Their performances are dynamic and improvisational; they’re inventing as they go along, having entire conversations through their instruments. Can we give computers the same capabilities?

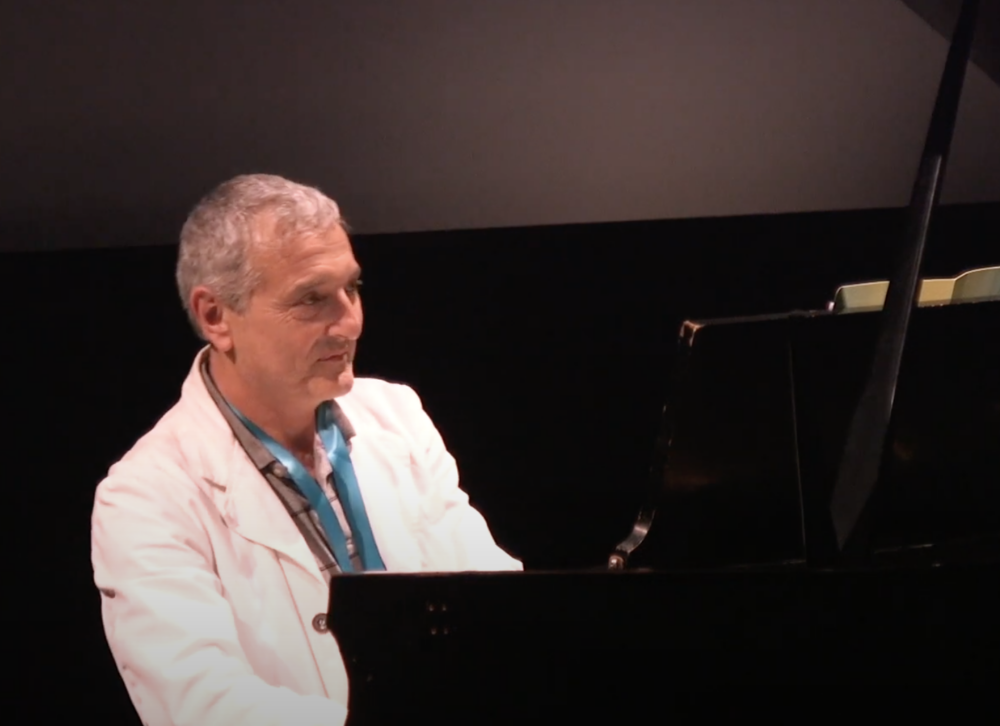

To answer that question, University of California San Diego Professor Shlomo Dubnov and Gérard Assayag, a researcher at the Institute for Research and Coordination in Acoustics/Music in Paris, are engaged in an ambitious collaborative research program supported by a €2.4 million (around $2.8 million) European Research Council Advanced Grant. Dubnov holds appointments in the departments of Music and Computer Science and Engineering and at UC San Diego’s Qualcomm Institute.

Dubnov and Assayag will work with other international partners, including Marc Chemillier from the École des Hautes Études en Sciences Sociales (EHESS), on Project REACH: Raising Co-creativity in Cyber-Human Musicianship, which is teaching computers how to improvise musically.

The Conversation

Almost all human interactions are improvisational, with each party modifying their responses based on real-time feedback. But computers, even with the most sophisticated AI, are constrained by their programming. And while chatbots and other online tools are getting better at conversing fluidly, they can hardly improvise on the fly.

“There are various machine learning applications today to create art,” said Dubnov. “The question is: Can we go beyond these tools and generate some sort of autonomous creativity?”

In this scenario, computers would do more than learn musical styles and replicate them. They would innovate on their own, produce longer and more complex sounds, and make their own decisions based on the feedback they receive from other musicians.

“Not only should the human musician be interested in what the machine is doing; the machine should be interested in what the person is doing,” said Dubnov. “It needs to be able to analyze what’s happening and decide when it’s going to improvise with its human partners and when it’s going to improvise on its own. It needs agency.”

The Struggle to Define Music

The REACH project falls broadly under reinforcement learning, a form of machine learning in which a system is given rewards that incentivize certain behavior. Defining those rewards, however, requires qualitative labels. For example: What makes music good?

In other words, REACH needs to teach computers an aesthetic, something humans don’t fully comprehend. Musically, what is good or bad? What makes Miles Davis’s Kind of Blue, Beethoven’s Ninth Symphony and the Beatles’ Abbey Road so engaging?

“If we produce sounds with the intent to make music, that is music,” said Dubnov. “That means that, to become musical, the computer must have its own intent.”

In a sense, the project is all about self-discovery. By teaching machines how to improvise well, the REACH group will be inventing more precise definitions of music for themselves. This all circles back to the methods behind reinforcement learning. The team will have to teach computers to make good choices.

“That will be our greatest scientific challenge,” said Dubnov. “How do we give the machine intrinsic motivation when it’s so difficult to specify what constitutes good?”

This article originally appeared in UC San Diego Newscenter, January 13, 2022, and is re-posted with permission in the UC IT Blog.