By Sean Peisert, Karen Stocks, and Von Welch

Cyber attacks don’t just hit big corporations, governments, or hospitals. Anything connected to a network is vulnerable, and that includes research and open science.

In general, scientists who use computers and other devices plugged into computer networks are aware of cyber threats. Often, though, they know little about the specific threats to systems like theirs, what to do about them, or the degree to which these threats target open science projects.

We developed the Open Science Cyber Risk Profile (OSCRP), an online framework to help scientists understand how their research might be affected by cyber attacks, even if they are not the target but simply collateral damage. It also helps scientists learn how to talk with information security personnel at their institutions about risks and the best ways to mitigate them.

To launch the project, Von Welch, director of the Center for Applied Cybersecurity Research at Indiana University and the NSF Cybersecurity Center of Excellence, and Sean Peisert at the Berkeley Lab, CENIC, ESnet, and UC Davis, put together a group of computer security professionals and domain scientists in 2016. We realized from the outset that if it was just a group of computer security folks, we wouldn’t know how to connect with scientists. So we included scientists who could provide that connection along with real examples of science at risk. Our goal was for the framework document to be a source of insight and translation for scientists about cyber risk.

The group received detailed presentations about science projects involving everything from high-energy particle physics to computer-networked ocean sensors. These helped us understand the ways in which cyber threats could impact those projects, as well as the range of solutions that could help mitigate those threats from both a social and technical perspective.

Both the scientists and cybersecurity professionals initially felt that data servers were likely to be the most important systems to protect. After learning about the subtleties and nuances of science projects, all of us were surprised when we realized how many scientific instruments – from sensors, to radio telescopes, to freezers containing biological samples – are exposed to cyber risks via computer networks. We were struck by the sheer number of network-connected systems that aren’t traditional computers. These devices may be directly network connected, or may rely on electrical power systems that are controlled over computer networks.

Scientists in the group initially felt that most of their risk would be due to “targeted” attacks, and questioned whether anyone would target an “open” science project. Through our discussions, we discovered that frequently cyber risks are not targeted but are due to collateral damage from broad, untargeted attacks. So it’s critical to protect open science projects too.

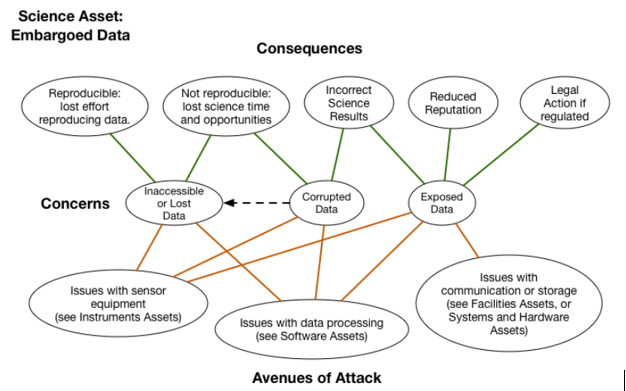

The OSCRP framework is largely divided into two primary components: The first section outlines “bad things” that can happen to “good science” as a result of cyber risks. The second section illustrates how those “bad things” can manifest themselves and what the impact on the science itself might be. Impacts are broken out by the type of “science asset,” such as various kinds of data, facilities, software, hardware, scientific instruments, and even “human” scientific assets.
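To make that two-part structure concrete, here is a minimal sketch in Python of how a project team might jot down its own assets before talking with IT security. It is not part of the OSCRP itself: the class names, field names, and the two example entries are hypothetical, and only the asset categories are taken from the list above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class AssetType(Enum):
    # Categories named in the article; the enum itself is illustrative.
    DATA = "data"
    FACILITY = "facility"
    SOFTWARE = "software"
    HARDWARE = "hardware"
    INSTRUMENT = "scientific instrument"
    HUMAN = "human"


@dataclass
class Risk:
    bad_thing: str        # what could go wrong
    science_impact: str   # what that would mean for the research


@dataclass
class ScienceAsset:
    name: str
    asset_type: AssetType
    risks: List[Risk] = field(default_factory=list)


def talking_points(assets: List[ScienceAsset]) -> Dict[AssetType, List[str]]:
    """Group every recorded risk by asset type, one line of text per risk."""
    grouped: Dict[AssetType, List[str]] = {}
    for asset in assets:
        for risk in asset.risks:
            line = f"{asset.name}: {risk.bad_thing} -> {risk.science_impact}"
            grouped.setdefault(asset.asset_type, []).append(line)
    return grouped


# Hypothetical entries, made up for illustration only.
example_assets = [
    ScienceAsset(
        "sample freezer",
        AssetType.INSTRUMENT,
        [Risk("network-controlled power is interrupted", "biological samples are lost")],
    ),
    ScienceAsset(
        "sensor data archive",
        AssetType.DATA,
        [Risk("files are silently altered", "published results cannot be trusted")],
    ),
]

if __name__ == "__main__":
    for asset_type, lines in talking_points(example_assets).items():
        print(asset_type.value)
        for line in lines:
            print("  " + line)
```

A list like this is only a starting point for the conversation; the framework document remains the place to look for the actual catalog of risks and impacts.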

All this detail is aimed at helping scientists better communicate with information security personnel at their institutions about potential risks to their work and the best ways to mitigate them. While some scientists and IT staff may be capable of mitigating attacks themselves, we believe it is important to provide assistance to scientists who don’t have in-house expertise.

Already the framework is having an impact. Richard LeDuc, director of Computational Proteomics at the Proteomics Center of Excellence at Northwestern University, and a core member of the OSCRP Working Group, said, “We already had an excellent working relationship with our institution’s IT units, but even so, using the OSCRP helped us find holes in our understanding of who is responsible for what.”

Creating the framework required a significant and diverse set of expertise. In addition to the three of us, Andrew Adams at the Pittsburgh Supercomputing Center, Michael Dopheide from ESnet, and Susan Sons from Indiana University all helped co-organize the effort. Numerous others from UCSD, Caltech, Northwestern, Harvard, and other institutions contributed security expertise as well as science use cases, insights, and perspectives on how to frame some of the concepts so they would resonate with scientists.

We hope that scientists not only read and embrace the OSCRP in a way that helps them have conversations with their campus IT security teams, but also extend and continue it. We would love to field questions from scientists or IT security pros about the framework, and would welcome any feedback about how the document is or isn’t useful. We’d also love to see the community jump in and contribute new examples. People may provide public feedback and ask questions by submitting issues on the OSCRP feedback page.

The computational instruments of scientific discovery and the threats to computing systems are both in a state of almost daily change, and since the risk will not disappear any time soon, the need for understanding and mitigation of such risks will not disappear either.

For more information about the framework, read “Mind the gap: Speaking like a cybersecurity pro,” ScienceNode, February 10, 2017.

Sean Peisert is a staff scientist at Lawrence Berkeley National Laboratory; chief cybersecurity strategist at CENIC; and an associate adjunct professor at UC Davis.

Karen Stocks is the director of the Geological Data Center at Scripps Institution of Oceanography at UC San Diego.

Von Welch is director of the Center for Applied Cybersecurity Research and the NSF Cybersecurity Center of Excellence at Indiana University.
